The server blips once, then the dashboard freezes. Your APIs stop responding, database connections drop, and suddenly your team scrambles to restore uptime while customer transactions hang in the balance. System outages don’t just inconvenience businesses; they disrupt entire digital operations. Portable cloud environments offer an innovative solution that transforms how you extend and back up local software systems, providing resilient continuity when your core infrastructure fails.

These hybrid systems deliver dual advantages: they strengthen operational reliability during downtime while doubling as portable environments for development, testing, or remote deployment. Advanced capabilities such as containerization provide consistent runtime environments across platforms, while integrated APIs enable seamless synchronization between portable and hosted systems. With frameworks like the DOGE software audit and licensing HUD, you can ensure compliance, visibility, and secure integration across all environments. This guide walks you through everything you need to successfully integrate portable cloud technology with local software infrastructure, from understanding the core architecture to implementing practical synchronization strategies that work both on-premises and on the go.

Key Takeaways

- Portable Cloud Integration enhances operational reliability during outages and allows for development and testing in portable environments.

- Implementing portable cloud systems involves understanding local software backup fundamentals and ensuring sufficient storage capacity and compute throughput.

- Containerization and integrated APIs improve performance and eliminate compatibility issues across environments, ensuring seamless operations.

- Follow a step-by-step integration process, focusing on configuration, security, and monitoring to achieve digital independence.

- Adopting portable cloud integration prepares organizations for resilience and supports a shift towards decentralized software ecosystems.

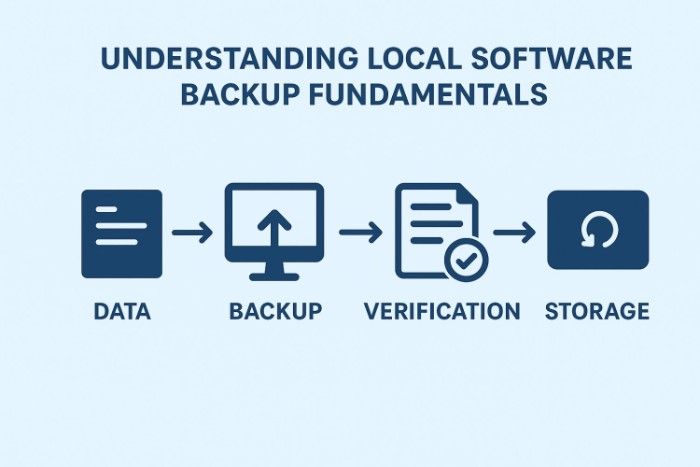

Understanding Local Software Backup Fundamentals

A local software backup acts as your operational safety net when cloud connectivity fails. These self-contained deployments store code, data, and configurations, and they activate automatically during network disruptions so that mission-critical services keep running without user interruption. Unlike traditional backup servers that require manual failover, modern software continuity systems fail over automatically for critical applications.

Two core specifications define system resilience: storage capacity and compute throughput. Capacity, measured in terabytes, sets how much data can be kept redundantly; throughput, measured in CPU cores or container instances, determines how much load the node can carry at once. As a rule of thumb, backup nodes should be able to handle 60–80% of regular traffic to keep critical workloads running during outages.
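The 60–80% guideline can be turned into a quick sizing estimate. The sketch below is illustrative only: the function name, the requests-per-second figures, and the idea of measuring load in requests per second are assumptions, not something the article prescribes.

```python
import math


def backup_nodes_needed(peak_rps: float, per_node_rps: float,
                        coverage: float = 0.7) -> int:
    """Nodes required to serve `coverage` (a fraction) of normal peak traffic.

    peak_rps and per_node_rps are hypothetical inputs: the regular peak load
    and the load a single backup node can sustain, both in requests/second.
    """
    if not 0 < coverage <= 1:
        raise ValueError("coverage must be in (0, 1]")
    if per_node_rps <= 0:
        raise ValueError("per_node_rps must be positive")
    # Round up: a fractional node still means provisioning a whole one.
    return math.ceil(peak_rps * coverage / per_node_rps)


# Example: 1,200 req/s at peak, 250 req/s per backup node.
low = backup_nodes_needed(1200, 250, coverage=0.6)   # 60% coverage
high = backup_nodes_needed(1200, 250, coverage=0.8)  # 80% coverage
print(low, high)
```

Running the estimate at both ends of the range gives a lower and upper bound for provisioning; real sizing should also account for storage capacity and burst behavior.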

Typical applications include maintaining database access, preserving API functionality, sustaining authentication services, and keeping analytics pipelines operational. A well-provisioned system maintains these essentials for hours or days on limited local resources. It extends them indefinitely when coupled with portable cloud deployments that sync updates and replicate data in real time.

Portable Cloud Systems: Technology and Advantages

How Portable Cloud Integrates with Local Infrastructure

Portable cloud environments are becoming central to how businesses maintain continuity and flexibility. They bridge the gap between local and cloud infrastructures, enabling seamless operations even during outages. According to a Forbes article referencing Gartner, by 2026, three out of four organizations will base their digital transformation strategy on the cloud, underscoring the critical importance of hybrid and portable systems for modern resilience.

These hybrid systems deliver dual advantages: they enhance operational reliability during downtime while also serving as portable environments for development, testing, or remote deployment.

Key Benefits for Operational Resilience

Portable cloud integration eliminates dependency on a single data center or network route. When regional outages occur, distributed nodes continue to handle compute tasks as long as local resources remain available. This autonomy proves invaluable during extended network disruptions. Beyond emergency continuity, hybrid integration reduces latency for remote users and optimizes cloud spending by shifting non-critical workloads to portable nodes during periods of low demand.

Environmentally, edge computing reduces the need for long-distance data transfer and oversized cloud resources, which lowers energy consumption and improves responsiveness. The sustainability gain complements the financial one.

External and Development Applications

The same portable environments that protect uptime also accelerate software delivery. Developers can clone entire cloud stacks onto laptops or edge servers for testing, debugging, or sandboxing without relying on live connections. During offsite work or field operations, deploy local environments that mirror production configurations, ideal for demos, R&D, or secure, offline development.

This dual-use flexibility maximizes ROI: infrastructure purchased for system resilience doubles as an innovation accelerator. Moving between environments is straightforward: export your containers, spin them up locally, and redeploy to production. Because the same images run everywhere, compatibility issues should be rare.

Critical Features for High-Performance Integration

Containerization and Image Parity

Containerized systems encapsulate runtime dependencies, ensuring identical behavior whether running locally or in the cloud. This consistency is credited with boosting reliability by 15–30% across deployments, though the gain will vary with the workload and how reliability is measured. The same application image that powers your production environment runs unchanged on an offline edge server or developer laptop, eliminating “it works on my machine” failures.

System Efficiency and Resource Utilization

Performance efficiency determines how effectively your portable environment uses CPU, memory, and I/O resources. A highly optimized container can deliver 20–30% more throughput per core than a virtual machine, reducing hardware requirements for local redundancy. In space- and energy-constrained environments, lightweight runtimes such as Docker or Podman outperform full hypervisors.

Integrated API Gateways

Modern portable systems include built-in API gateways or service meshes that manage secure communication between portable nodes and cloud systems. These gateways standardize authentication, routing, and telemetry, enabling seamless reconnection and synchronization. The direct API linkage also enhances performance by bypassing redundant middleware, resulting in a 10–15% increase in efficiency.
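One common way to get "seamless reconnection and synchronization" is to buffer changes made while offline and replay them in order once the gateway is reachable again. The sketch below illustrates that pattern only; the `Outbox` class, the `Change` record shape, and the `send` callback are hypothetical names, not part of any particular gateway product.

```python
from collections import deque
from typing import Callable, Deque, Dict

# Hypothetical change record, e.g. {"key": "order-17", "value": "paid"}.
Change = Dict[str, str]


class Outbox:
    """Buffers changes made offline and replays them in order on reconnect."""

    def __init__(self, send: Callable[[Change], bool]) -> None:
        # `send` is an assumed callback that returns True once the cloud side
        # has accepted the change, and False if the link is still down.
        self._send = send
        self._pending: Deque[Change] = deque()

    def record(self, change: Change) -> None:
        """Queue a change locally; nothing is transmitted yet."""
        self._pending.append(change)

    def flush(self) -> int:
        """Try to deliver queued changes in order; return how many were sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # still offline: keep this change and everything after it
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

A change is removed from the queue only after `send` confirms it, so a dropped connection mid-flush loses nothing. The receiving side should still treat changes as idempotent, since a change may be re-sent if the acknowledgment is lost.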

Portability and Security Factors

Effective portable systems balance lightweight deployment with robust protection. Quality builds add minimal resource overhead while maintaining encryption, access control, and compliance standards. Look for container signing, encrypted configuration secrets, and identity federation compatibility with your main IAM provider.

Durability also matters; automated snapshotting and checksum validation protect data integrity during transport and offline operation. Built-in monitoring tools ensure stability whether deployed on laptops, edge gateways, or mobile field servers.
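Checksum validation can be as simple as comparing a SHA-256 digest recorded when the snapshot was taken against one computed after transport. This is a minimal sketch using Python's standard `hashlib`; the function names and the choice of SHA-256 are assumptions for illustration.

```python
import hashlib
import io
from typing import BinaryIO


def sha256_of(stream: BinaryIO, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a binary stream, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def snapshot_is_intact(stream: BinaryIO, expected_hex: str) -> bool:
    """True if the stream's digest matches the one recorded at snapshot time."""
    return sha256_of(stream) == expected_hex.strip().lower()


# Usage with in-memory data; a real snapshot would be opened with open(path, "rb").
original = b"snapshot contents"
recorded = sha256_of(io.BytesIO(original))
print(snapshot_is_intact(io.BytesIO(original), recorded))          # intact copy
print(snapshot_is_intact(io.BytesIO(b"corrupted copy"), recorded))  # altered copy
```

A plain digest detects accidental corruption. Protecting against deliberate tampering additionally requires a signature or keyed MAC, which this sketch does not cover.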

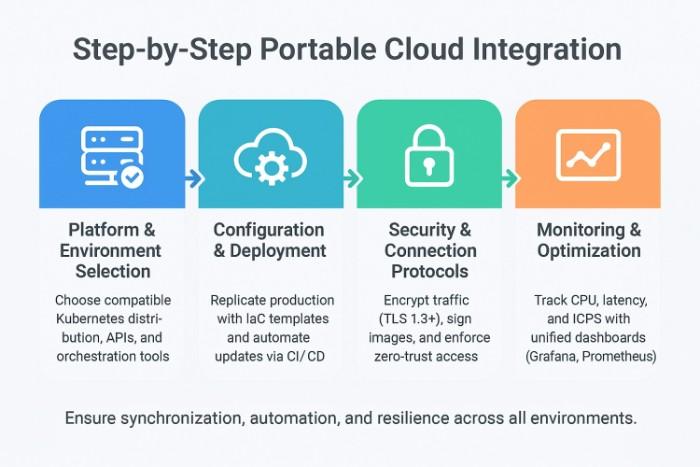

Step-by-Step Integration Process

1. Platform and Environment Selection

Match your portable platform to your core system’s orchestration capabilities. Kubernetes users should select compatible, lightweight distributions such as K3s or MicroK8s. Verify compatibility for APIs, storage drivers, and network configurations.

Create an integration checklist covering:

- Synchronization cadence and method (push/pull, event-driven)

- Data format and schema consistency

- Identity and access management alignment

- Deployment automation tools

- Security and compliance configurations

2. Configuration and Deployment Best Practices

Deploy your local cluster as a replica environment using identical manifests or IaC templates. Automate updates via CI/CD pipelines that mirror production commits into portable environments. Utilize dynamic DNS or load balancers to intelligently route traffic during outages.

Maintain a modular architecture, with stateless services syncing seamlessly, while stateful services use distributed databases or incremental backups for consistency.
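The routing decision behind a DNS or load-balancer failover boils down to "send traffic to the most preferred endpoint that is currently healthy". The sketch below shows that selection logic in isolation; the `Endpoint` type, the priority convention, and the example URLs are hypothetical, and a real setup would delegate the health check to the load balancer or DNS provider.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Endpoint:
    name: str
    url: str
    priority: int  # lower number = preferred (assumed convention)


def pick_endpoint(endpoints: Iterable[Endpoint],
                  is_healthy: Callable[[Endpoint], bool]) -> Optional[Endpoint]:
    """Return the most preferred healthy endpoint, or None if all are down."""
    for endpoint in sorted(endpoints, key=lambda e: e.priority):
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical topology: hosted production first, portable replica second.
ENDPOINTS = [
    Endpoint("portable-replica", "https://replica.example.internal", priority=2),
    Endpoint("cloud-primary", "https://api.example.com", priority=1),
]

# Simulate an outage of the primary by reporting it unhealthy.
chosen = pick_endpoint(ENDPOINTS, lambda e: e.name != "cloud-primary")
print(chosen.name if chosen else "no healthy endpoint")
```

Passing the health check in as a function keeps the selection logic testable without network access; in production it would typically wrap an HTTP probe with a short timeout.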

3. Security and Connection Protocols

Encrypt all data exchanges using TLS 1.3 or later. Implement token-based authentication for portable nodes and sign all deployment images. Do not hot-swap running containers during a sync: pause and lock the affected workloads first to prevent data corruption.
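For a node written in Python, the TLS 1.3 floor can be enforced in the client's SSL context using the standard `ssl` module. This is a minimal sketch; the function name and the optional private CA bundle are assumptions, and it requires a Python build linked against an OpenSSL version that supports TLS 1.3.

```python
import ssl
from typing import Optional


def make_tls13_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side context that refuses to negotiate anything below TLS 1.3.

    ca_file is an optional path to a private CA bundle (hypothetical here);
    when omitted, the system's default trust store is used.
    """
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


context = make_tls13_client_context()
print(context.minimum_version.name)
```

The resulting context can be passed to `http.client.HTTPSConnection(host, context=context)` or to `urllib.request.urlopen(url, context=context)`. Connections to servers that only offer TLS 1.2 or older will then fail during the handshake rather than silently downgrade.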

Use firewalls and zero-trust access controls for nodes operating in public or mobile environments.

4. Monitoring and Optimization

Track system performance through unified observability dashboards (Prometheus, Grafana, or Datadog). Establish baselines for normal operation (CPU, latency, and IOPS) and monitor deviations from them during failover events.

If resource usage spikes beyond 80%, consider refactoring workloads or redistributing containers. Automate regular updates for dependencies and security patches, even on offline nodes.
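The 80% rule and the baseline comparison can both be expressed as small checks over collected metrics. The sketch below assumes utilization is already available as fractions between 0 and 1, keyed by node name; the function names and sample values are hypothetical, and in practice the numbers would come from your monitoring stack.

```python
from typing import Dict, List


def nodes_over_limit(utilization: Dict[str, float],
                     limit: float = 0.80) -> List[str]:
    """Names of nodes whose utilization (0.0 to 1.0) exceeds the limit, sorted."""
    return sorted(name for name, used in utilization.items() if used > limit)


def deviation_from_baseline(baseline: float, observed: float) -> float:
    """Relative change versus the baseline, e.g. 0.25 means 25% above normal."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (observed - baseline) / baseline


# Hypothetical CPU utilization sampled during a failover drill.
cpu = {"edge-a": 0.91, "edge-b": 0.42, "laptop-1": 0.80}
print(nodes_over_limit(cpu))

# Hypothetical latency in milliseconds: 40 ms normally, 50 ms during failover.
print(deviation_from_baseline(40.0, 50.0))
```

The comparison is strict, so a node sitting exactly at 80% is not flagged. Whether the boundary value should alert is a policy choice to settle when defining the thresholds.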

Modern solutions like AWS Snowball Edge or Azure Stack Edge include built-in analytics to simplify performance tracking across hybrid architectures.

Portable Implementation Strategies

Edge and field deployments require streamlined operation. Pre-configure your containers and package dependencies into portable bundles that can be deployed to laptops, drones, or IoT gateways within minutes.

For distributed teams or disaster recovery scenarios, keep redundant configuration files in secure, version-controlled repositories accessible offline. Automate provisioning scripts so that new instances can be spun up within seconds of a failure.

Rapid deployment readiness separates resilient organizations from reactive ones. Pre-label configuration templates, document setup sequences, and test recovery drills regularly to ensure readiness under real conditions.

Achieving Digital Independence Through Hybrid Integration

Integrating portable cloud systems with local software infrastructure delivers transformative reliability, protecting operations from downtime while empowering development and edge capabilities.

The dual-use nature of portable environments, as both resilient backup and agile workspace, maximizes long-term value. Containerized consistency ensures reliable replication across contexts, while API-level synchronization keeps distributed systems unified.

This step-by-step framework shows that building a resilient hybrid software ecosystem requires no deep infrastructure expertise, only a clear understanding of the fundamentals of compatibility, security, and automation.

As container orchestration becomes more accessible and distributed computing costs decline, these systems will evolve from optional tools to standard architecture components. By adopting portable cloud integration today, you’re not just preparing for the next outage; you’re participating in the broader transformation toward decentralized, adaptive, and resilient software ecosystems.