In the last two years, Generative AI has moved from a novelty to a necessity. We see it drafting emails, writing code, and planning travel itineraries. Naturally, the healthcare industry asks: If GPT-4 can pass the Bar Exam and the USMLE (United States Medical Licensing Examination), why can’t we just plug it into our Electronic Health Record (EHR) systems and let it diagnose patients?

It is a fair question. GPT-4 is a technological marvel. It is incredibly eloquent, broadly knowledgeable, and surprisingly empathetic. However, in the high-stakes world of clinical medicine, being “broadly knowledgeable” isn’t enough. In fact, it can be dangerous.

While General Purpose LLMs (Large Language Models) like GPT-4 are excellent at general reasoning, they lack the specific calibration, safety guardrails, and domain-specific depth required for clinical deployment.

Here is why relying solely on generalist models like GPT-4 falls short for clinical diagnosis, and why the future belongs to specialized medical AI models (like Med-PaLM, Meditron, and MedGemma).

Key Takeaways

- Generative AI is becoming essential, but general models like GPT-4 lack the precision needed for clinical diagnosis.

- Specialized medical AI models, like Med-PaLM and Meditron, offer greater accuracy tailored to healthcare needs.

- General models may fabricate information, while specialized models prioritize safety and clinical accuracy.

- Medical terminology requires a nuanced understanding that general models often miss and that specialized models are trained to capture.

- The future of medical AI lies in an ecosystem of specialized tools, not in a single generalist model used for clinical diagnosis.

1. The “Swiss Army Knife” vs. The Scalpel

Imagine you are about to undergo a delicate surgery. Would you prefer the surgeon use a Swiss Army Knife, or a specialized surgical scalpel?

The Swiss Army Knife is versatile. It has a blade, a screwdriver, and a pair of scissors. It can do a thousand things reasonably well. This is GPT-4. It is trained on a broad sweep of the internet: Reddit threads, Wikipedia, coding tutorials, and poetry.

The scalpel, however, is designed for one thing: precision. This represents specialized medical models.

The Data Distribution Problem

The core issue lies in the training data. GPT-4’s training set includes medical textbooks, yes, but they are diluted by billions of tokens of non-medical text. When you ask GPT-4 a medical question, it is predicting the next word based on a probabilistic mix of casual internet conversation and formal medical literature.

Specialized models, such as Med-PaLM (Google) or Meditron (EPFL), are adapted specifically to the medical domain using material such as biomedical literature, clinical guidelines, and medical question-answering data. They prioritize medical accuracy over conversational flair. When the context is life-or-death, you don’t need a model that can also write a sonnet about the diagnosis; you need a model that adheres strictly to clinical protocols.

2. The Hallucination Hazard in Healthcare

“Hallucination” in AI refers to the model confidently stating a fact that is entirely made up. In creative writing, this can be a feature. In clinical diagnosis, it is a liability.

General models are optimized for plausibility, not truth. If you ask GPT-4 to cite a study about a specific drug interaction, it might invent a study that sounds real—complete with a fake title, fake authors, and a fake DOI link—because that pattern fits its training data.

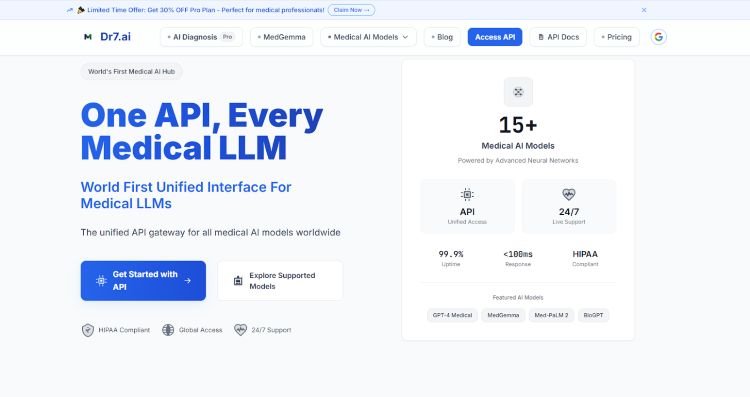

Why Specialized Models Are Safer

Specialized models are often tuned and evaluated differently. For example, models available via the Dr7.ai ecosystem are often benchmarked against medical question-answering datasets like MedQA and PubMedQA. Because their training emphasizes vetted biomedical literature rather than the open web, they are less likely to fabricate citations, although no model is immune to hallucination.

Furthermore, newer medical models are being designed to say “I don’t know” or “insufficient data” rather than guess. This is a critical safety feature, and one that generalist models often struggle with because they are tuned to always produce a helpful-sounding answer.
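
One simple way to picture this abstention behavior is a confidence gate placed after the model. The sketch below is illustrative only: the `Candidate` type, the thresholds, and the assumption that the model exposes calibrated probabilities are all hypothetical, not a description of how any particular medical model works.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    label: str          # a candidate diagnosis or answer
    probability: float  # model-assigned probability, assumed to be calibrated


def answer_or_abstain(candidates: List[Candidate],
                      min_confidence: float = 0.8,
                      min_margin: float = 0.2) -> str:
    """Return the top label only if it is confident and clearly ahead.

    The thresholds are hypothetical placeholders; a real deployment would
    tune them against clinical validation data.
    """
    if not candidates:
        return "insufficient data"
    ranked = sorted(candidates, key=lambda c: c.probability, reverse=True)
    top = ranked[0]
    runner_up = ranked[1].probability if len(ranked) > 1 else 0.0
    too_uncertain = top.probability < min_confidence
    too_close = (top.probability - runner_up) < min_margin
    if too_uncertain or too_close:
        return "insufficient data"
    return top.label
```

With this gate, a near tie between two diagnoses is reported as “insufficient data” instead of being resolved by a guess.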

3. Nuance and Medical Terminology

Language is context-dependent. In the general world, the word “unremarkable” might mean boring or unimpressive. In a radiology report, “unremarkable” is the best news a patient can get—it means everything is normal.

General LLMs often miss these subtle, high-stakes linguistic shifts.

- Negation handling: Medical notes are full of negations (“Patient denies chest pain,” “No evidence of fracture”). General models sometimes gloss over these negations when summarizing long texts, which can flip the conclusion.

- Acronym Ambiguity: “MS” could mean Multiple Sclerosis, Mitral Stenosis, or Morphine Sulfate depending on the context. A general model might guess based on internet popularity; a medical model infers based on the surrounding clinical data.

Models like BioGPT (Microsoft) and Clinical Camel are specifically trained to understand the syntax and semantics of biomedical text, which helps ensure that “positive” (test result) isn’t confused with “positive” (emotion).
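
To make the negation and acronym problems concrete, here is a deliberately tiny rule-based sketch. It is not how BioGPT or Clinical Camel work internally; the trigger list and cue words are hypothetical examples chosen only to show why the surrounding clinical context has to drive the interpretation.

```python
import re

# Hypothetical, very small trigger list; real clinical NLP uses far larger ones.
NEGATION_TRIGGERS = ("denies", "no evidence of", "no", "without", "negative for")

# Hypothetical context cues for each expansion of "MS".
MS_SENSES = {
    "multiple sclerosis": {"neurology", "demyelinating", "mri", "lesions", "relapse"},
    "mitral stenosis": {"murmur", "echocardiogram", "valve", "cardiology", "atrial"},
    "morphine sulfate": {"mg", "dose", "pain", "opioid", "prn"},
}


def is_negated(sentence: str, finding: str) -> bool:
    """True if a negation trigger appears before `finding` in the sentence."""
    text = sentence.lower()
    pos = text.find(finding.lower())
    if pos == -1:
        return False  # finding not mentioned at all
    prefix = text[:pos]
    return any(re.search(rf"\b{re.escape(t)}\b", prefix) for t in NEGATION_TRIGGERS)


def disambiguate_ms(note: str) -> str:
    """Pick the sense of "MS" whose cue words overlap most with the note."""
    words = set(re.findall(r"[a-z]+", note.lower()))
    scores = {sense: len(words & cues) for sense, cues in MS_SENSES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "ambiguous"
```

For example, `is_negated("Patient denies chest pain", "chest pain")` is true, and `disambiguate_ms("Echocardiogram shows MS with diastolic murmur")` selects mitral stenosis because of the cardiac cue words rather than how common each expansion is online.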

4. The Privacy Elephant: HIPAA and Data Security

This is the biggest hurdle for enterprise adoption. Consumer versions of ChatGPT (and many other consumer LLMs) may retain conversations and use them to train future models. If a doctor enters identifiable patient data into a public interface, they are likely violating HIPAA (Health Insurance Portability and Accountability Act) regulations.

While enterprise versions of GPT-4 exist, they are often expensive and act as “black boxes.”

The Dr7.ai Approach

Specialized medical AI platforms are built with compliance as the foundation, not an afterthought. When you use an API gateway dedicated to medical AI:

- Data Segregation: Processing happens in environments designed for Protected Health Information (PHI).

- No Training on Client Data: Professional medical APIs typically commit contractually that inputs are not used to train the base model.

- Local Deployment Options: Some specialized open-source models (like Meditron 70B) can be hosted on a hospital’s own servers or private cloud, so patient data never leaves infrastructure the hospital controls.

5. Cost and Latency: Why Burn a Forest to Light a Candle?

GPT-4 is a massive model (rumored to be over a trillion parameters). Every time you query it, you are engaging massive computational resources. This results in higher costs per token and slower response times.

For a hospital processing millions of patient records daily, or a startup building a real-time triage chatbot, cost and speed matter.

Specialized models can often match or exceed that performance on clinical diagnosis and other medical tasks with far fewer parameters.

- MedGemma-27B might outperform GPT-4 on specific clinical reasoning tasks while being significantly cheaper to run.

- CheXagent is optimized specifically for X-rays. Using GPT-4 Vision for X-rays is overkill and often less accurate than a model built solely for radiology.

By using a specialized model, you are paying for medical expertise, not for the ability to write Python code or translate French poetry.
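
The cost argument comes down to simple arithmetic: daily spend scales with record volume, tokens per record, and price per token. The sketch below uses placeholder numbers only. The volumes and both prices are hypothetical and are not published rates for GPT-4 or any medical model.

```python
def daily_inference_cost(records_per_day: int,
                         tokens_per_record: int,
                         price_per_1k_tokens: float) -> float:
    """Estimated daily spend, in the same currency as price_per_1k_tokens."""
    total_tokens = records_per_day * tokens_per_record
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical inputs for illustration; substitute real volumes and vendor prices.
RECORDS_PER_DAY = 1_000_000
TOKENS_PER_RECORD = 1_500
LARGE_MODEL_PRICE = 0.03   # placeholder price per 1k tokens
SMALL_MODEL_PRICE = 0.003  # placeholder price per 1k tokens

large = daily_inference_cost(RECORDS_PER_DAY, TOKENS_PER_RECORD, LARGE_MODEL_PRICE)
small = daily_inference_cost(RECORDS_PER_DAY, TOKENS_PER_RECORD, SMALL_MODEL_PRICE)
print(f"large: {large:,.0f}  small: {small:,.0f}  ratio: {large / small:.1f}x")
```

Because the cost is linear in every input, any per-token price gap carries straight through to the daily bill at hospital-scale volumes.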

6. The “Second Opinion” Ecosystem

The argument isn’t necessarily that GPT-4 is useless in medicine. It is that it shouldn’t be the only tool, nor the primary diagnostic engine.

The future of Medical AI isn’t a single monolithic model. It is an ecosystem of specialized agents. This is the philosophy behind Dr7.ai.

- You use BioGPT to mine research papers.

- You use CheXagent to analyze the patient’s scans.

- You use Med-PaLM or MedGemma to synthesize the clinical history.

This “ensemble approach” mimics a real hospital. You don’t ask the General Practitioner to perform brain surgery; you refer the patient to a Neurosurgeon. Similarly, developers and healthcare providers should route their queries to the model best suited for the specific medical task.
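
In code, this kind of referral can be sketched as a small dispatch table. Everything below is hypothetical: the task names and handler functions are stand-ins, and the handlers return labeled strings instead of calling real models or any actual Dr7.ai API.

```python
from typing import Callable, Dict


# Placeholder handlers: a real system would call the corresponding model here.
def mine_literature(query: str) -> str:
    return f"[literature model] {query}"


def analyze_imaging(query: str) -> str:
    return f"[imaging model] {query}"


def synthesize_history(query: str) -> str:
    return f"[clinical reasoning model] {query}"


# Hypothetical task types mapped to the specialist that should handle them.
ROUTES: Dict[str, Callable[[str], str]] = {
    "literature": mine_literature,
    "imaging": analyze_imaging,
    "clinical_history": synthesize_history,
}


def route(task_type: str, payload: str) -> str:
    """Send the payload to the specialist registered for this task type."""
    handler = ROUTES.get(task_type)
    if handler is None:
        # Fail loudly instead of silently falling back to a generalist model.
        raise ValueError(f"No specialist registered for task type: {task_type!r}")
    return handler(payload)
```

Raising an error for unknown task types is a design choice in this sketch: an unrouted query is surfaced to the developer instead of being quietly answered by whichever model happens to be the default.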

Conclusion: The Right Tool for the Job

We are in the golden age of AI, and the temptation to use the biggest, most famous model for everything is strong. But medicine demands more than general competence; it demands specific excellence.

GPT-4 is an incredible generalist tool that has changed the world. But for clinical diagnosis—where accuracy, privacy, and specific terminology are non-negotiable—specialized medical models are superior. They offer the depth of a specialist, the safety of a compliance-first architecture, and the efficiency of a purpose-built tool.