Artificial intelligence has become a defining force in modern healthcare. From image analysis to predictive monitoring, AI promises faster insights and more personalized care. However, when AI becomes part of a medical device, the regulatory conversation changes. Can rules designed for static hardware truly govern software that learns, adapts, and evolves?

This question sits at the heart of today’s regulatory challenge. Medical devices have always been regulated with safety and performance in mind, yet AI introduces behaviors that are harder to freeze, test once, and approve forever. Over 97% of AI-enabled medical devices are cleared through the FDA’s 510(k) pathway, a process built to assess substantial equivalence to existing products. That raises serious questions about whether traditional approval models are equipped to govern adaptive, continuously learning systems. Understanding why this matters requires rethinking how risk, evidence, and oversight are applied.

Table of contents

- Why Traditional Medical Device Regulations Fall Short for AI
- How AI Changes the Concept of Intended Use
- Software That Evolves: The Challenge of Continuous Learning
- Risk Management When Algorithms Make Decisions
- Clinical Evidence in AI-Based Medical Devices
- Human Oversight and Trust in AI-Driven Devices
- Post-Market Surveillance in the Age of AI
- Toward a Lifecycle-Based Regulatory Approach

Why Traditional Medical Device Regulations Fall Short for AI

Traditional medical device regulation assumes stability. A device is designed, validated, manufactured, and then used largely as approved. Changes are controlled, documented, and assessed before release. This model works well for mechanical and electrical systems.

AI challenges that assumption. Even when models are locked at release, their performance depends heavily on data, context, and user interaction. A diagnostic algorithm trained on one population may behave differently when exposed to another. The device itself has not changed, but its outputs have. Regulators are therefore confronted with a familiar framework facing unfamiliar behavior.

AI medical device innovation is global, though not evenly distributed. About 51.7% of submissions originate from North American companies, with European and Asian developers contributing significant shares. This geographic concentration underscores where regulatory frameworks, such as the FDA’s 510(k) pathway, are being tested most intensively.

How AI Changes the Concept of Intended Use

Intended use has long been a cornerstone of medical device regulation. It defines what a device is supposed to do and under which conditions. But how do you describe intended use when an algorithm identifies patterns rather than executes predefined steps?

Consider an AI system that supports clinical decision-making. Is its intended use to provide recommendations, highlight risks, or influence diagnosis? And how much reliance is expected from the clinician?

These questions are no longer academic. They directly affect classification, clinical evidence requirements, and risk controls. AI forces manufacturers to define intended use with greater precision and humility.

Software That Evolves: The Challenge of Continuous Learning

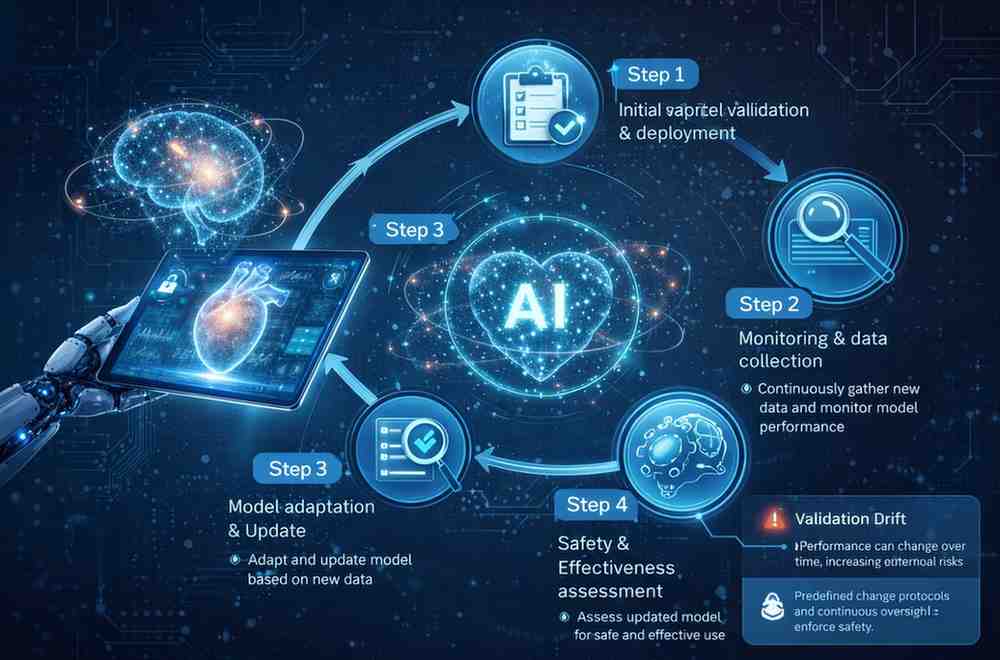

One of AI’s strengths is its ability to improve over time. Yet, from a regulatory perspective, change is a risk. Continuous learning systems raise concerns about validation drift. If a model adapts after deployment, how can manufacturers ensure it remains safe and effective?

This is why regulators increasingly emphasize lifecycle control rather than one-time approval. Performance monitoring, controlled updates, and predefined change protocols become essential.

The focus shifts from proving that the device was safe at launch to proving that it remains safe throughout its use.
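To make the idea of a predefined change protocol more concrete, here is a minimal Python sketch of an update gate. It assumes a binary classifier, a fixed validation set, and acceptance bounds agreed before any update is made. The names `AcceptanceCriteria` and `update_is_acceptable`, and the chosen metrics, are hypothetical illustrations and do not come from any regulation or guidance document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical bounds, fixed before any model update is attempted."""
    min_sensitivity: float
    min_specificity: float
    max_sensitivity_drop: float  # allowed drop relative to the released model


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels and predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


def update_is_acceptable(y_true, released_pred, candidate_pred, criteria):
    """Accept a candidate model only if it stays inside the pre-agreed bounds."""
    released_sens, _ = sensitivity_specificity(y_true, released_pred)
    cand_sens, cand_spec = sensitivity_specificity(y_true, candidate_pred)
    return (
        cand_sens >= criteria.min_sensitivity
        and cand_spec >= criteria.min_specificity
        and (released_sens - cand_sens) <= criteria.max_sensitivity_drop
    )


# Illustrative data only: labels and predictions on a fixed validation set.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
released = [1, 1, 1, 0, 0, 0, 0, 1]
candidate = [1, 1, 1, 1, 0, 0, 0, 1]
criteria = AcceptanceCriteria(0.70, 0.70, 0.05)
print(update_is_acceptable(y_true, released, candidate, criteria))
```

The design point is that the bounds are written down before the update exists, so the decision to release is a check against a protocol rather than a fresh judgment each time.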

Risk Management When Algorithms Make Decisions

Risk management for traditional medical devices often relates to physical failure. With AI, risk moves into the realm of decision-making. Bias, false positives, false negatives, and a lack of explainability can all lead to clinical harm.

Imagine an AI triage system that consistently underestimates risk in certain patient groups. The device may function exactly as designed, yet still contribute to unsafe outcomes.

This is why risk management for AI-enabled devices must extend beyond technical failure and address data quality, representativeness, and transparency. Regulations increasingly require manufacturers to identify and manage these algorithmic risks as rigorously as hardware hazards.
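The triage example above can be turned into a simple check. The following Python sketch compares false negative rates across patient groups and flags any group that lags the best-performing one by more than a chosen gap. The function names, the group labels, and the `max_gap` value are assumptions made for illustration; a real analysis would also need adequate sample sizes and confidence intervals.

```python
from collections import defaultdict


def false_negative_rate_by_group(y_true, y_pred, groups):
    """Return {group: share of true positive cases the model missed}."""
    positives = defaultdict(int)
    missed = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 0:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


def flag_disparities(rates, max_gap):
    """List groups whose miss rate exceeds the best group's by more than max_gap."""
    if not rates:
        return []
    best = min(rates.values())
    return sorted(g for g, r in rates.items() if r - best > max_gap)


# Illustrative data only: 1 = high-risk patient, 0 = low-risk patient.
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]

rates = false_negative_rate_by_group(y_true, y_pred, groups)
print(rates)
print(flag_disparities(rates, max_gap=0.2))
```

In this toy data the model misses no high-risk patients in group A but two of three in group B, which is the kind of pattern an aggregate accuracy figure would hide.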

Clinical Evidence in AI-Based Medical Devices

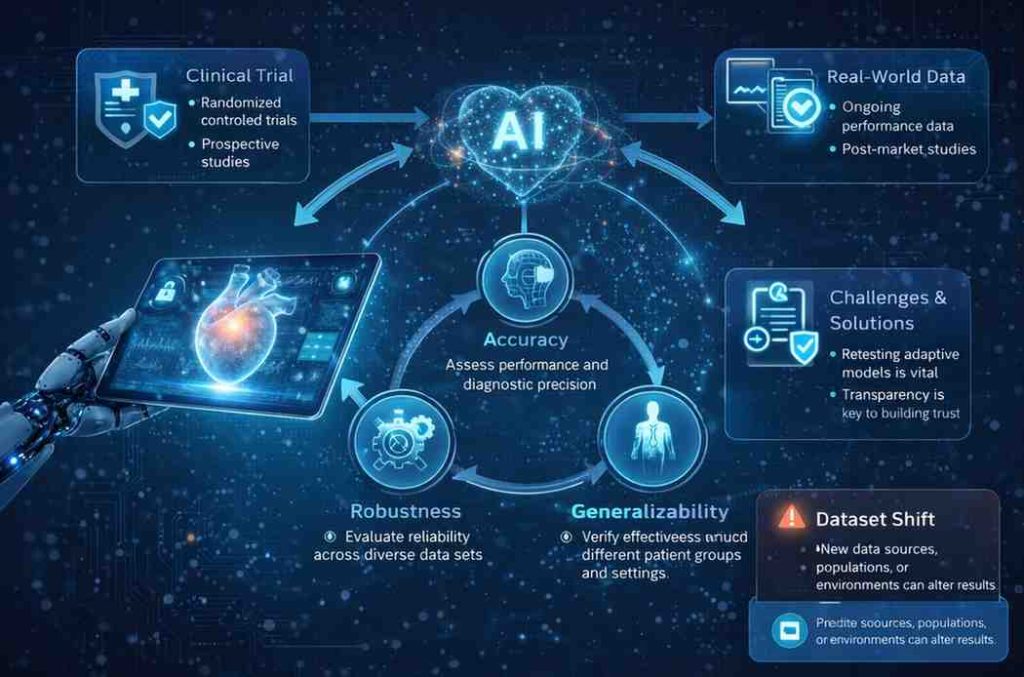

Clinical evidence remains central, but its nature changes with AI. Traditional trials may demonstrate performance at a single point in time, while AI systems demand evidence of consistency across datasets, environments, and user groups.

Clinical evaluation must therefore consider not only accuracy, but also robustness and generalizability. Real-world data is playing an increasingly important role, complementing pre-market studies. This shift reinforces the idea that clinical evidence for AI is not a snapshot, but a continuous process.

Human Oversight and Trust in AI-Driven Devices

AI does not operate in isolation. Clinicians remain responsible for patient care, which raises an important question: how much should they trust the algorithm?

Usability and transparency become critical. If clinicians do not understand how an AI system supports decisions, they may either over-rely on it or ignore it altogether. Both outcomes are risky. Regulations increasingly emphasize human oversight, ensuring that AI supports rather than replaces professional judgment.

Post-Market Surveillance in the Age of AI

Post-market surveillance has always mattered, but AI elevates its importance. Performance drift, emerging biases, and cybersecurity vulnerabilities may only become visible after widespread use.

Manufacturers are expected to actively monitor AI behavior in the field, investigate signals, and update risk assessments accordingly. This reinforces a lifecycle-based regulatory mindset, where learning continues long after market entry.
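One way such field monitoring might look in practice is a drift signal on the model's output scores. The sketch below uses the Population Stability Index (PSI), a general-purpose distribution comparison that is swapped in here as an example and is not prescribed by any regulator. The bin count, the `eps` floor, and the alert threshold are assumptions a manufacturer would need to justify for its own device.

```python
import math


def population_stability_index(baseline, current, n_bins=10, eps=1e-6):
    """Compare two score samples using bins defined on the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clip out-of-range scores
            counts[idx] += 1
        total = len(values)
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / total, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Illustrative data only: validation-time scores vs. shifted field scores.
baseline_scores = [i / 100 for i in range(100)]
field_scores = [s + 0.3 for s in baseline_scores]

ALERT_THRESHOLD = 0.25  # hypothetical value, to be set per device
psi = population_stability_index(baseline_scores, field_scores)
if psi > ALERT_THRESHOLD:
    print("Drift signal: investigate and update the risk assessment")
```

A signal like this does not prove the device has become unsafe. It only indicates that field inputs or outputs no longer resemble the validation data, which is the trigger for the investigation step described above.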

Toward a Lifecycle-Based Regulatory Approach

AI forces regulators and manufacturers to accept that safety is not a static achievement. Approval is no longer the finish line, but a checkpoint. Continuous oversight, adaptive controls, and ongoing evidence generation are becoming the norm.

This does not weaken regulation. On the contrary, it strengthens it by aligning oversight with how AI actually behaves. A different regulatory mindset is not a concession to innovation, but a requirement for responsible adoption.