As artificial intelligence continues to reshape digital experiences, one of its most fascinating frontiers lies in sound engineering. In an era dominated by remote work, streaming, and voice-driven applications, clear and natural audio has become essential. Poor sound quality doesn’t just disrupt communication; it undermines productivity, entertainment, and accessibility.

Today, AI-powered noise cancellation software is redefining how digital systems understand, separate, and enhance sound. By applying advanced machine learning and signal processing algorithms, developers are creating software that adapts to real-world acoustic environments in real time with minimal human input.

What Is AI Noise Cancellation Software?

AI noise cancellation software uses deep learning models trained on vast datasets of environmental noise such as traffic, wind, mechanical hums, and human chatter. Unlike traditional noise reduction filters that rely on fixed frequency suppression, AI-based systems analyze sound contextually.

The algorithm detects and differentiates between foreground signals (like human speech) and unwanted background noise, then reconstructs the clean signal through real-time adaptive filtering. The result is natural-sounding, low-latency audio that preserves clarity while intelligently muting distractions.

These models are commonly built using frameworks like TensorFlow or PyTorch, often exported to the ONNX interchange format, and optimized for on-device inference via TensorFlow Lite or Core ML on mobile platforms. Developers and audio engineers can explore AI-driven sound applications through platforms such as store.boyamic.com, which showcases how intelligent software can redefine audio precision.

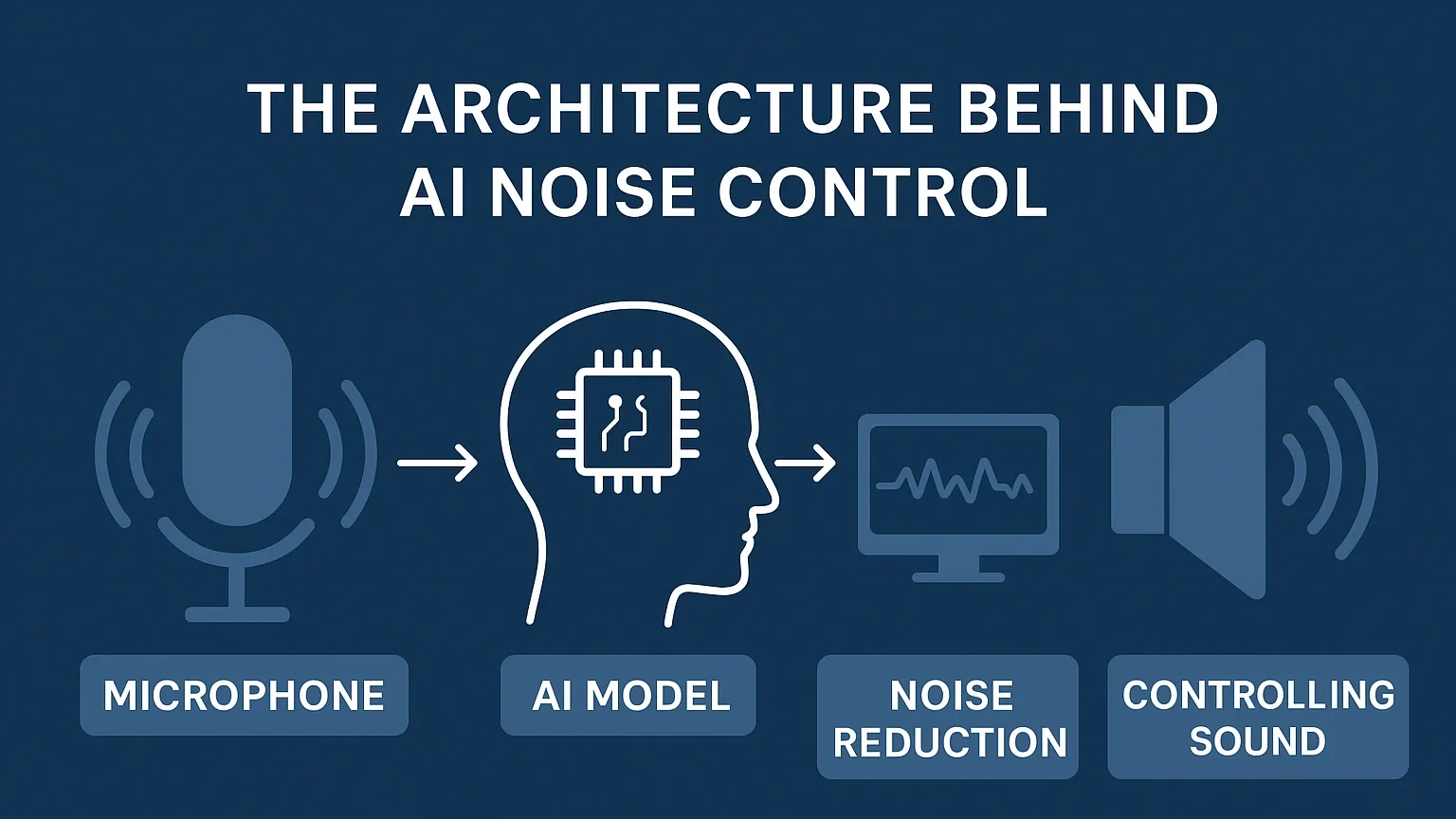

The Architecture Behind AI Noise Control

Modern AI noise suppression software typically combines:

- Acoustic Feature Extraction: Using digital signal processing (DSP) techniques, the system converts raw audio into spectrograms or Mel-frequency cepstral coefficients (MFCCs), representations that the model can interpret.

- Neural Network Analysis: Deep learning architectures, often recurrent neural networks (RNNs) or transformers, process the time–frequency data to identify and isolate noise signatures.

- Adaptive Filtering Layer: The software applies dynamic spectral subtraction or Wiener filtering guided by AI predictions, ensuring minimal voice distortion.

- Real-Time Inference and Feedback: Using low-latency pipelines, the software continuously refines its parameters based on input feedback, ensuring consistent clarity in changing environments.

This architecture allows the system to learn not just from predefined data but also from real-world audio patterns, achieving a level of context awareness that traditional DSP methods cannot match.
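The adaptive filtering step above can be illustrated with a per-bin Wiener-style gain. This is a minimal sketch, not a production filter: it assumes the power spectrum of one audio frame is already available and that a neural network has supplied a noise power estimate for each frequency bin. The function names, the `floor` parameter, and the numeric values are hypothetical and chosen only for illustration.

```python
def wiener_gains(noisy_power, noise_power, floor=0.1):
    """Per-bin gain G = max(P_noisy - P_noise, 0) / P_noisy, never below `floor`.

    `noise_power` stands in for the model's per-bin noise estimate (assumption).
    The floor limits how hard any bin is attenuated, which reduces artifacts.
    """
    gains = []
    for p_noisy, p_noise in zip(noisy_power, noise_power):
        if p_noisy <= 0.0:
            gains.append(floor)
            continue
        clean_estimate = max(p_noisy - p_noise, 0.0)
        gains.append(max(clean_estimate / p_noisy, floor))
    return gains


def apply_gains(spectrum, gains):
    """Scale each (possibly complex) spectral bin by its gain."""
    return [g * x for g, x in zip(gains, spectrum)]


# Hypothetical 4-bin frame: bin 1 is dominated by speech, the rest by noise.
noisy_power = [4.0, 100.0, 2.0, 1.0]
noise_power = [4.0, 4.0, 1.0, 2.0]
gains = wiener_gains(noisy_power, noise_power)
filtered = apply_gains([2.0, 10.0, 1.4, 1.0], gains)
```

Bins where the estimated noise accounts for most of the energy are pushed down to the floor, while the speech-dominated bin passes almost unchanged. In a real system the gains would be recomputed for every frame.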

AI Models That Learn and Adapt

The power of AI-based audio processing lies in learning from data. In supervised learning, models are trained on paired examples: each noisy recording is matched with its clean counterpart. Over the course of training, the model learns to predict what the “clean” signal should sound like.
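One common way to obtain such noisy/clean pairs is to mix clean recordings with noise at a chosen signal-to-noise ratio (SNR). The sketch below assumes that approach; the synthetic sine wave, the Gaussian noise, and the helper names `mean_power` and `mix_at_snr` are illustrative stand-ins rather than any particular library's API.

```python
import math
import random


def mean_power(signal):
    """Average power of a signal given as a list of samples."""
    return sum(x * x for x in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so the clean-to-noise power ratio equals `snr_db`, then add it."""
    target_noise_power = mean_power(clean) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / mean_power(noise))
    return [c + scale * n for c, n in zip(clean, noise)]


random.seed(0)  # reproducible noise for the example
sample_rate = 16000
# Stand-in for a clean speech clip: 0.1 s of a 440 Hz tone.
clean = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(1600)]
# Stand-in for recorded background noise.
noise = [random.gauss(0.0, 1.0) for _ in range(1600)]

noisy = mix_at_snr(clean, noise, snr_db=10.0)
training_pair = (noisy, clean)  # model input, training target
```

Varying `snr_db` and the noise source across examples is one way to expose a model to a wider range of conditions during training.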

More advanced implementations use self-supervised or reinforcement learning to enhance model flexibility. These approaches enable systems to adapt dynamically to new noise types without manual retraining.

Software developers now deploy lightweight neural models that can run efficiently on local devices (edge computing), reducing latency and preserving privacy, since audio data doesn’t need to leave the device.
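Whether a model is light enough for a given device can be checked with simple frame arithmetic. The sketch below assumes frame-based processing; the 480-sample frame at 48 kHz, the 240-sample lookahead, and the 2.5 ms processing time are hypothetical figures used only to show the calculation.

```python
def frame_duration_ms(frame_size, sample_rate):
    """Duration of one audio frame in milliseconds."""
    return 1000.0 * frame_size / sample_rate


def real_time_factor(processing_ms, frame_size, sample_rate):
    """Processing time divided by frame duration; below 1.0 keeps up with real time."""
    return processing_ms / frame_duration_ms(frame_size, sample_rate)


def algorithmic_latency_ms(frame_size, lookahead, sample_rate):
    """Delay from buffering one frame plus any lookahead samples the model needs."""
    return 1000.0 * (frame_size + lookahead) / sample_rate


frame_ms = frame_duration_ms(480, 48000)              # 10 ms frames
rtf = real_time_factor(2.5, 480, 48000)               # assumed 2.5 ms per frame
latency_ms = algorithmic_latency_ms(480, 240, 48000)  # frame plus 5 ms lookahead
```

A real-time factor well below 1.0 leaves headroom for other work on the device, and the algorithmic latency sets a lower bound on the delay the listener experiences regardless of hardware speed.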

Software Integration and Application Layers

AI noise cancellation software is increasingly being embedded across various platforms, often as part of broader audio processing SDKs or APIs. Video conferencing platforms, for instance, are integrating AI models through WebRTC extensions to deliver real-time noise reduction and enhanced voice clarity. Similarly, streaming applications now embed neural DSP plugins to optimize sound quality dynamically, ensuring consistent performance across different listening environments. Even operating systems have begun implementing AI-based voice isolation frameworks that intelligently distinguish human speech from ambient noise.

For developers, these capabilities can be integrated using libraries such as RNNoise, DeepFilterNet, or the Krisp SDK, depending on the desired level of customization, processing power, and latency tolerance. While cloud-based APIs enable large-scale training and deployment of these models, the current trend leans toward on-device AI inference. This design choice balances performance efficiency with stronger user privacy.

Performance Optimization Through Software

Running AI audio models efficiently is a challenge that software engineers address through:

- Quantization and Pruning: Compressing large neural networks to reduce compute demand.

- Parallel Processing: Using GPU or NPU acceleration for real-time performance.

- Dynamic Model Switching: Automatically scaling the complexity of models depending on CPU load or network conditions.

These optimizations ensure high-quality sound without draining computational resources, a critical balance in software-driven ecosystems where responsiveness is key.
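Two of the techniques listed above can be sketched in a few lines. The example assumes symmetric 8-bit quantization of a small weight list and a load-based rule for choosing among three model sizes. The thresholds, model names, and weight values are hypothetical; real toolchains perform quantization on whole tensors with calibration data.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


def pick_model(cpu_load):
    """Choose a model variant from CPU load in [0, 1]; thresholds are illustrative."""
    if cpu_load < 0.5:
        return "large"
    if cpu_load < 0.8:
        return "medium"
    return "small"


weights = [0.5, -1.27, 0.01, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half a quantization step. The switching rule trades audio quality for responsiveness when the device is under load.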

Expanding Use Cases for AI Noise Suppression

The applications of AI noise cancellation software extend far beyond consumer audio:

- Enterprise communication platforms use AI suppression to enhance meeting clarity.

- Streaming services and podcast tools integrate it to produce studio-quality audio in real time.

- Accessibility software applies it for more precise transcription and speech recognition.

- Automotive and AR/VR systems use adaptive AI filtering to manage ambient sound dynamically.

This shift demonstrates how AI audio frameworks are becoming core components of modern software ecosystems, not just add-on features.

Conclusion

AI noise cancellation has evolved from a simple sound filter into a sophisticated software intelligence system capable of learning, adapting, and optimizing in real time. Its integration across apps, SDKs, and cloud platforms marks a significant leap in applied artificial intelligence and digital signal processing.

For developers, it represents a growing frontier: transforming raw acoustic data into context-aware, human-centric experiences. As AI frameworks continue to mature, the line between noise and clarity is no longer defined by hardware; it’s written in code.