The tech world is currently gripped by a fever dream, and its name is Apple Silicon. With the release of the Mac Mini M4, YouTube channels, influencers, and tech blogs are flooding the internet with headlines declaring it the “Ultimate AI Machine” or the “Budget King for LLMs.”

On paper, the proposition is undeniably seductive. You get a sleek, beautiful aluminum box, the legendary power efficiency of the M4 chip, and the promise of Apple’s Unified Memory Architecture. The idea that a $600 machine can load Large Language Models (LLMs) locally feels like the democratization of AI. It feels like the future.

But if you scratch beneath the surface of the marketing gloss, the reality is far more complicated. For serious AI enthusiasts, developers, and especially those looking to deploy the next generation of Autonomous Agents, the Mac Mini M4 is often a good idea in appearance only. It is a gilded cage: shiny and impressive, but ultimately restrictive, surprisingly expensive, and structurally unsuited for the future of automation.

That conclusion is not just personal skepticism. AI is reshaping cloud infrastructure in 2026, with companies moving away from simple CPU or GPU comparisons toward more nuanced, scalable infrastructure choices tailored to real workloads. In that context, the idea that a fixed, consumer-grade machine represents the future of AI deployment starts to fall apart.

Before you drop $1,500+ on a hardware configuration that you will be stuck with forever, it is time to look at why a dedicated Virtual Private Server (VPS) is not just a valid alternative, but the only viable strategy for serious agent deployment.

Table of contents

- 1. The “Unified Memory” Trap: The Hidden Cost of Apple AI

- 2. From Chatbots to Agents: The Paradigm Shift

- 3. The ClawdBot Case Study: Deployment vs. Tinkering

- 4. The Heat, The Noise, and The Wear

- 5. Data Privacy: The “Local” Myth vs. VPS Sovereignty

- 6. The Software Ecosystem: The Linux Supremacy

- Conclusion

1. The “Unified Memory” Trap: The Hidden Cost of Apple AI

The biggest selling point of the Mac Mini for AI is the Unified Memory. Unlike a traditional PC architecture, where system RAM and GPU VRAM are separate pools, Apple Silicon shares a single pool between the CPU and the GPU. This means if you buy a Mac with 24GB or 32GB of RAM, the GPU can use most of it to load model weights, minus what macOS keeps for itself and your other apps.

This sounds amazing until you look at the price tag and the cold reality of model sizes.

The Apple Tax vs. Commodity Hardware

Apple’s memory pricing is notoriously exorbitant. The base model Mac Mini M4 is affordable, but it comes with 16GB of RAM. That is fine for everyday desktop use and small models, but a 70B parameter model quantized to 4 bits needs roughly 35GB for its weights alone, before you even open a web browser or get on with your daily tasks.
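To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. The function names are made up for illustration, and the 20% runtime overhead and the 8GB reserved for the OS and your apps are assumptions, not measurements. Real usage also depends on context length and the inference runtime.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(
    params_billions: float,
    bits_per_weight: float,
    ram_gb: float,
    reserved_gb: float = 8.0,      # assumption: memory kept for the OS and apps
    overhead_factor: float = 1.2,  # assumption: KV cache and runtime buffers
) -> bool:
    """Rough check: do the weights plus overhead fit in what is left of RAM?"""
    needed_gb = estimate_weights_gb(params_billions, bits_per_weight) * overhead_factor
    return needed_gb <= ram_gb - reserved_gb


if __name__ == "__main__":
    weights = estimate_weights_gb(70, 4)
    print(f"70B model at 4 bits: about {weights:.0f} GB of weights")
    for ram in (16, 32, 64):
        verdict = "fits" if fits_in_memory(70, 4, ram) else "does not fit"
        print(f"{ram} GB machine: {verdict}")
```

Under these assumptions, a 4-bit 70B model only becomes realistic at the 64GB tier, which is exactly where the pricing gets painful.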

To make the machine viable for serious AI work, you need to upgrade.

- Want 32GB? That’s an immediate $400 markup.

- Want 64GB? That means stepping up to the M4 Pro chip, and you are now pushing into Mac Studio territory, at around $2,000 or more.

In the world of VPS and cloud computing, memory is a commodity, not a luxury good. You can rent a server with 64GB of RAM and high-performance NVMe storage for a fraction of the cost of a single Apple upgrade tier. When you buy a Mac Mini, you are paying a huge premium for the potential to run AI, whereas with a VPS, you pay only for the performance you actually use.
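If you want to run the numbers for your own situation, a simple break-even calculation is a reasonable starting point. This is only a sketch: the $40 monthly VPS price below is a placeholder, not a quote from any provider, and the calculation ignores electricity, resale value, and price changes.

```python
import math


def break_even_months(upfront_cost: float, monthly_rent: float) -> int:
    """Number of months after which cumulative rent reaches the upfront cost."""
    if monthly_rent <= 0:
        raise ValueError("monthly_rent must be positive")
    return math.ceil(upfront_cost / monthly_rent)


if __name__ == "__main__":
    hypothetical_vps_price = 40.0  # placeholder: substitute a real quote
    # $1,500 is the upgraded Mac configuration mentioned in this article.
    print(break_even_months(1500, hypothetical_vps_price), "months for the full machine")
    # $400 is the 32GB upgrade alone.
    print(break_even_months(400, hypothetical_vps_price), "months for the RAM upgrade")
```

Plug in real prices for the server size you actually need. The point is that renting lets you stop paying, or resize, the moment your requirements change.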

2. From Chatbots to Agents: The Paradigm Shift

This is the most critical point that hardware reviewers miss. They test the Mac Mini by opening a chat window, asking a question, and measuring the number of tokens per second it generates. They treat the AI as a toy or a replacement for a search engine.

The problem is that real AI systems fail for very different reasons. Some industry analyses put the share of AI projects that fail to deliver on their promises as high as 95%, not because models are slow, but because of deeper systemic issues such as data pipelines, architectural limitations, integration complexity, and long-term scalability. Tokens per second tell you almost nothing about whether an AI system can survive real workloads, persistent memory, agent orchestration, or production deployment. Optimizing for benchmark demos instead of system design is how teams end up with impressive prototypes and unusable products.

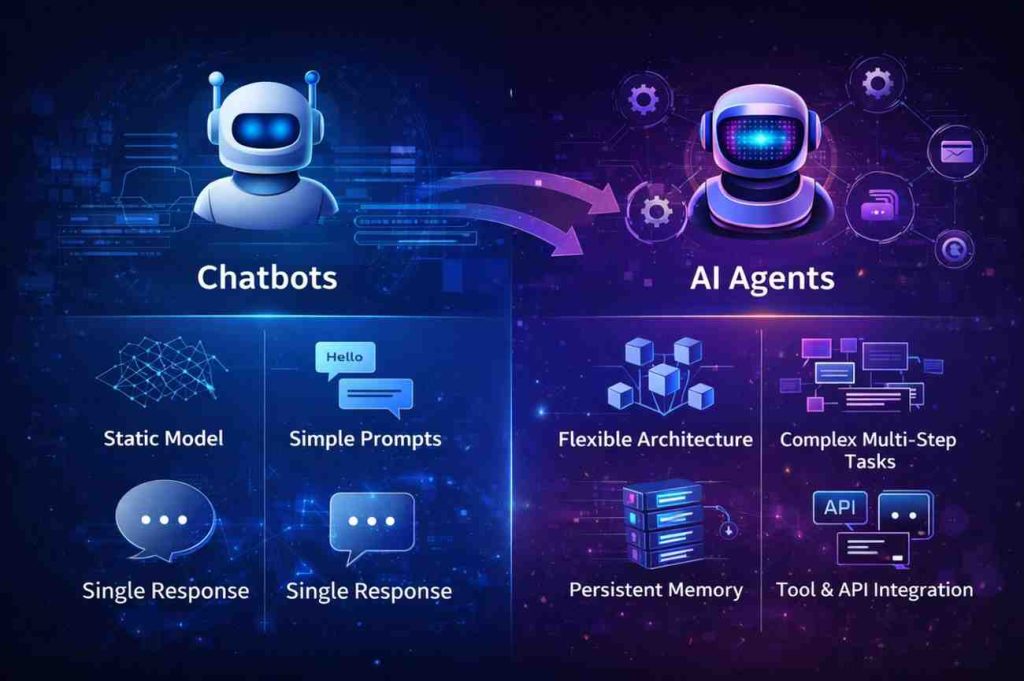

But “Chatting” is the AI of 2023. The AI of 2025 and beyond is about Autonomous Agents.

What is an Agent?

Unlike a chatbot that sits and waits for you to type “Hello,” an Agent is a program designed to work for you, autonomously, continuously, and proactively.

- Chatbot: You ask for a recipe, and it gives you a recipe.

- Agent: You tell it, “Find me the best leads on Twitter for my business,” and it spends the next 48 hours scraping, analyzing profiles, filtering data, and generating reports.

Tools like ClawdBot represent this shift. They connect to the internet, interact with APIs, manage databases, and execute complex workflows that can take hours or days to complete.

Why the Mac Mini Fails at Agents

This shift from passive chat to active agents changes the hardware requirement entirely. An agent like ClawdBot is not a “burst” workload; it is a “sustained” workload.

- The “Always-On” Requirement: An agent is useless if it sleeps. To run ClawdBot effectively, your machine needs to be online 24/7/365. Do you really want your personal Mac Mini, the one you use for Netflix, email, and photo editing, to never sleep?

- macOS Process Management: Apple’s macOS is aggressive about saving energy. It readily throttles or suspends background processes when the machine is idle or the screen is off. Keeping an AI agent alive on macOS requires fighting the operating system itself (using tools like the built-in caffeinate command or the Amphetamine app), which is not a stable production environment.

- Network Reliability: Your home fiber connection is great for streaming, but it is not enterprise-grade. If your IP changes (which happens often with residential ISPs) or your router resets, every connection your agent has open is dropped mid-task, and an agent that does not handle that gracefully crashes.
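Wherever the agent runs, it should survive a dropped connection instead of dying on the first error. The sketch below shows one common pattern, retrying with exponential backoff and a little jitter. It is an illustration only, not how ClawdBot or any specific tool handles failures, and the names `run_with_retries` and `task` are invented for this example.

```python
import random
import time


def run_with_retries(task, max_attempts=5, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Call task() until it succeeds, waiting longer after each network failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the failure
            # Double the wait each time (1s, 2s, 4s, ...) up to max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Add up to 10% jitter so many workers do not retry in lockstep.
            delay += random.uniform(0, delay * 0.1)
            sleep(delay)
```

A retry loop papers over short outages, but it cannot fix a machine that went to sleep or a router that stays down, which is the real argument for hosting the agent somewhere built to stay online.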

3. The ClawdBot Case Study: Deployment vs. Tinkering

Let’s look specifically at a tool like ClawdBot. This is a sophisticated piece of software designed to leverage AI for automation. It requires a stable environment, specific dependencies, and continuous internet access.

Running such a tool on your Mac Mini is “tinkering.” You open a terminal, run the bot, watch it work for 10 minutes, then close it to play a video game. That is not deployment. Deployment means the bot is a worker that lives in the cloud, completely independent of your personal device.

This is where the workflow changes. Smart developers realize that instead of keeping a terminal window open on their desktop for days, they should simply learn how to deploy ClawdBot on a VPS to guarantee stability. By moving the workload to a remote server, you ensure that the agent continues to perform its tasks (scraping, analyzing, and interacting) even if you shut down your laptop and go on vacation.

The difference is night and day:

- Local Mac: You are the babysitter. You have to ensure the Mac doesn’t sleep, the Wi-Fi doesn’t drop, and the RAM doesn’t overflow.

- VPS Deployment: You are the manager. You set it up, you disconnect, and the work gets done.
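On a typical Linux VPS, "set it up and disconnect" usually means handing the process to a service manager such as systemd, which restarts it after a crash or a reboot. The sketch below generates a minimal unit file. The description, user, paths, and start command are placeholders: this article does not specify how ClawdBot is started, so substitute whatever its documentation tells you to run.

```python
def render_unit(description: str, exec_start: str, user: str, working_dir: str) -> str:
    """Build the text of a minimal systemd service unit for a long-running agent."""
    lines = [
        "[Unit]",
        f"Description={description}",
        # Start only once the network is up, since the agent needs internet access.
        "Wants=network-online.target",
        "After=network-online.target",
        "",
        "[Service]",
        "Type=simple",
        f"User={user}",
        f"WorkingDirectory={working_dir}",
        f"ExecStart={exec_start}",
        # Restart the agent whenever it exits, after a short pause.
        "Restart=always",
        "RestartSec=5",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    print(render_unit(
        description="Example AI agent",
        exec_start="/opt/agent/run.sh",
        user="agent",
        working_dir="/opt/agent",
    ))
```

Saved as something like /etc/systemd/system/agent.service and enabled with `systemctl enable --now agent.service`, a unit along these lines keeps the worker running without a terminal window, or you, attached to it.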

4. The Heat, The Noise, and The Wear

We need to talk about hardware longevity. Apple Silicon is efficient, but running LLMs is mathematically intense. It keeps the CPU, the GPU, and the memory system under sustained heavy load.

If you use a Mac Mini as a dedicated server for an AI agent:

- Thermal Stress: While the M4 runs cool, sustained 100% load (which happens during long inference chains or data processing) creates heat. Over time, this heat accelerates component wear and dries out thermal paste. Memory pressure makes it worse: when a model does not quite fit in RAM, macOS swaps to disk, and the constant writes shorten the SSD’s lifespan.

- The “Fan” Factor: Do you want a computer humming in your living room or office 24/7? Even a quiet fan becomes an annoyance when it never stops.

A VPS lives in a data center. The cooling is industrial. The noise is not your problem. The hardware degradation is the provider’s responsibility, not yours. If a server fails, they migrate you to a new one. If your Mac Mini burns out because you treated it like a server, you are out $1,500.

5. Data Privacy: The “Local” Myth vs. VPS Sovereignty

One of the main arguments for the Mac Mini is privacy. “I don’t want to send my data to big tech APIs,” people say. And they are absolutely right to be concerned. However, there is a dangerous misconception that “Local Hardware” is the only way to achieve privacy. When you rent a VPS from a reputable provider, you are renting a private slice of infrastructure. You have root access. You control the firewalls. You can encrypt the disk.

In many ways, a VPS dedicated to AI is safer than a personal Mac Mini. Why? Because of Data Hygiene. On your Mac, your experimental AI agents share the same drive as your tax returns, your family photos, and your saved passwords. One vulnerability in an open-source AI library (and there are many) could expose your personal life. By deploying on a VPS, you practice Compartmentalization. The AI lives in a sandbox. If the sandbox is compromised, your personal life remains untouched. You keep control of your data without risking your personal digital identity.

6. The Software Ecosystem: The Linux Supremacy

While Apple has made strides with MLX (their machine learning framework), the uncomfortable truth remains: The native language of AI is Linux. Most open-source projects, vector databases (such as Chroma or Qdrant), and agentic frameworks are built with Linux as their primary platform. Running them on macOS often involves:

- Dealing with slow Docker performance (since Docker on Mac runs inside a Linux VM anyway).

- Waiting for “Metal” ports of popular libraries.

- Debugging obscure “Mach-O” errors that the rest of the developer world doesn’t see.

If you are trying to deploy sophisticated tools, you want to be where the development is happening. A Linux VPS gives you the native environment that these tools were built for. Python environments are easier to manage, dependencies install cleanly, and you have access to the full power of the server-grade command line.

Conclusion

The Mac Mini M4 is a triumph of consumer engineering. It is an excellent computer for video editing, coding, and general use. But for the specific, resource-intensive, and “always-on” demands of Autonomous AI Agents, it is a bottleneck.

It locks you into a fixed amount of RAM, forces you to run a desktop operating system for server tasks, and exposes your personal hardware to rapid degradation.

If your goal is to actually use AI, deploying agents that work for you while you sleep, generate value, and automate your life, then the cloud is where you belong. The flexibility, power, security, and cost-effectiveness of a VPS offer a smarter path forward. Don’t let the hype cycle trick you into buying into a future that is already out of date. Build on infrastructure that scales.