AI red teaming tools continue to play a vital role in testing and improving the reliability, safety, and fairness of modern artificial intelligence systems. As organizations increase their use of AI in critical operations, structured testing methods become essential to uncover weaknesses before they can cause harm. Understanding which tools provide the most effective combination of automation, rigor, and compliance allows teams to strengthen their AI systems with confidence.

The best tools of 2026 build on lessons from earlier platforms, offering better integration, scalable infrastructure, and support for both open source and commercial use cases. They help teams simulate real-world attacks, identify risks, and verify that models act as intended under pressure. This article explores leading solutions and the key factors that set specific tools apart in the evolving field of AI red teaming.

Table of contents

- Promptfoo

Promptfoo is an open-source tool that helps developers test, evaluate, and secure large language model (LLM) applications. It focuses on AI red teaming, enabling users to detect vulnerabilities such as prompt injection, personal data exposure, and policy compliance risks. The tool’s design supports both local execution and cloud integration, enabling teams to control performance and privacy.

It works as a command-line interface and library, making it accessible for developers who want to integrate testing into continuous development processes. Teams can define test scenarios and compare model responses across providers like OpenAI, Anthropic, and Meta. This structured approach helps identify weaknesses early in the development cycle.

Promptfoo’s testing framework encourages test-driven development of LLMs. Its declarative configuration makes it easy to automate evaluations in pipelines or CI/CD workflows. For organizations building AI agents or retrieval-augmented generation systems, it provides a practical, repeatable way to measure and improve model behavior.

- PyRIT

PyRIT, short for Python Risk Identification Tool, is Microsoft’s open-source framework for red teaming generative AI systems. It supports security teams and machine learning engineers by automating tests that expose vulnerabilities in large language models and other AI applications.

The tool helps users simulate and track attack scenarios, measure model resilience, and identify potential risks in model responses. By providing structure to testing workflows, PyRIT allows teams to perform consistent evaluations rather than ad hoc experiments.

Because PyRIT is an open automation framework, it integrates with various data sources and models, from cloud environments to smaller, local versions. Its modular design makes it adaptable to multiple use cases and security standards.

The community-driven nature of PyRIT encourages collaboration on secure AI practices. This openness helps organizations reduce development time while maintaining a focus on responsible AI deployment.

- Mindgard

Mindgard provides automated AI red teaming that helps organizations identify and fix weaknesses in their models. It simulates realistic attack scenarios to test how systems respond under pressure. This approach allows teams to detect issues before malicious actors can exploit them.

The platform focuses on securing AI throughout its lifecycle, covering model training, deployment, and runtime environments. It helps uncover hidden or “shadow” AI systems that may bypass regular security checks. Continuous testing ensures that defenses improve as threats evolve.

Mindgard’s technology integrates adversarial testing, prompt injection detection, and protection against manipulation. These features make it worthwhile for companies to use large language models and other generative AI tools. Its design emphasizes automated analysis and risk reduction over manual testing.

Organizations seeking to strengthen their AI ecosystem can use Mindgard to safeguard data and maintain trustworthy systems. Mindgard is one of the top red teaming tools because it aligns with modern security standards and addresses vulnerabilities that traditional application security methods often miss.

- Garak

Garak is an open-source framework built for red teaming large language models and AI agents. It focuses on identifying weaknesses that could lead to undesired behavior or security risks. Written in Python, the tool has grown in popularity among researchers and engineers who assess model robustness.

It uses automated probing and adaptive attacks to test responses across many categories, including safety, reliability, and bias. The system can simulate harmful or misleading prompts to see how models react. By doing so, it helps teams uncover issues that might not appear during routine testing.

Developers value Garak’s modular design and plugin-based architecture. Users can extend its capabilities to fit different testing goals or integrate it with their existing workflows. Its “auto red-team” function can even run multiple attack scenarios without manual intervention.

Because it is open-source, Garak benefits from community input and regular updates. That collaboration makes it adaptable to evolving model architectures and emerging risk areas. Organizations often use it to perform continuous assessments and strengthen their AI security posture.

- FuzzyAI

FuzzyAI is an open-source tool built to test the robustness and safety of AI systems through automated fuzzing and prompt testing. It helps teams examine how large language models and other AI systems respond to unpredictable or malicious inputs. The tool’s goal is to identify weak points that could lead to inaccurate, biased, or unsafe behavior.

It supports integration with various large-language-model frameworks, enabling researchers and developers to run systematic tests with minimal setup. FuzzyAI can simulate attacks and stress test inputs at scale, making it useful for both security and quality assurance teams.

The platform includes logging, scoring, and result visualization to track vulnerabilities and evaluate mitigation measures. Researchers can use these insights to harden their models before deployment. Its straightforward interface and automation features make it suitable for both small research groups and enterprise AI teams focused on responsible model development.

- promptmap2

Promptmap2 is an open-source AI red-teaming tool designed to test how language models respond under varied, sometimes adversarial conditions. It focuses on mapping model behaviors across different prompt types, revealing weaknesses and inconsistencies that might otherwise go unnoticed.

The tool supports structured testing workflows, making it useful for researchers and developers conducting systematic evaluations. Users can define prompts, categorize responses, and compare results between different model versions. This helps teams track improvements or regressions in safety and reliability.

Promptmap2 also integrates with other analysis frameworks to standardize reporting and ensure reproducibility. Because it is open source, the community can extend its features or adapt it to new testing requirements. These qualities make it a practical choice for organizations seeking transparent, repeatable AI red teaming processes.

- Microsoft AI Red Teaming Agent

The Microsoft AI Red Teaming Agent helps organizations test the safety of generative AI systems during design and deployment. It works within Azure AI Foundry and automates vulnerability identification across prompts, responses, and model behavior.

It uses Microsoft’s open-source Python Risk Identification Tool (PyRIT) to run systematic evaluations. This combination allows teams to simulate adversarial interactions that expose risks, such as harmful or biased outputs.

The agent provides structured scoring and reporting to guide developers toward risk-mitigation strategies. Its automation reduces the need for manual testing and enables continuous security monitoring across AI models.

Developers and security teams can use the agent to probe applications and model endpoints under realistic attack conditions. By integrating these tests into the development pipeline, teams can build safer and more reliable AI systems before deployment.

- Red Team AI by mend.io

Red Team AI by mend.io focuses on testing how AI systems react under real-world conditions. It helps teams discover weaknesses by simulating adversarial behavior rather than relying only on static checks. This practical approach offers deeper insight into how AI models perform under pressure.

The platform integrates red-team functions into the Mend AppSec environment. This makes it easier for security and development teams to align AI testing with broader application security practices. Users can view AI performance metrics, behavioral risks, and vulnerability reports in one interface.

Red Team AI supports both automated and guided testing workflows. Teams can run scenario-based assessments that reflect domain-specific risks. The platform’s data-driven methods help organizations prioritize mitigation and track improvements over time.

Its flexible deployment options suit companies evaluating generative AI, predictive analytics, or model governance controls. By connecting AI risk testing with existing security tools, Red Team AI enables consistent monitoring and quicker response to potential threats.

- OpenAI Red Teaming Toolkit

The OpenAI Red Teaming Toolkit provides organizations with structured methods to assess AI model safety and reliability. It supports both automated and human-in-the-loop testing to identify potential misuse, security flaws, or unexpected behaviors. The toolkit reflects OpenAI’s experience running internal and external evaluations for large models.

It helps teams test language, image, and multimodal systems against realistic attack scenarios. Users can design controlled experiments to observe model outputs under stress or manipulation, offering valuable insight into how systems handle edge cases and policy violations.

The toolkit also aligns with common safety frameworks, such as OWASP and NIST, which guide risk assessment and response planning. By integrating these standards, developers can track vulnerabilities across technical, ethical, and operational domains with consistent metrics.

Researchers and security professionals often use the toolkit in early development to detect issues before deployment. This approach improves transparency, builds trust, and enables more informed decisions about AI system safety.

- IBM AI Fairness 360

IBM AI Fairness 360 (AIF360) is an open-source toolkit for detecting and mitigating bias in datasets and machine learning models. It helps teams identify fairness issues before deployment, which is essential when testing AI systems for reliability and ethical compliance.

The toolkit includes a broad set of metrics to evaluate whether different groups receive fair treatment in model outcomes. It also provides algorithms that can mitigate bias without sacrificing significant model performance. These features make AIF360 valuable for red teaming exercises that test the social and ethical robustness of AI models.

Developed by IBM Research and maintained by the LF AI & Data Foundation, AIF360 supports both Python and R environments. Its flexibility enables integration with common data science workflows, allowing AI teams to examine bias as part of broader security and assurance testing.

When used in red teaming, AIF360 enables security and ethics teams to simulate real-world bias scenarios. Assessing inequities in model predictions contributes to safer, more compliant, and trustworthy AI deployments.

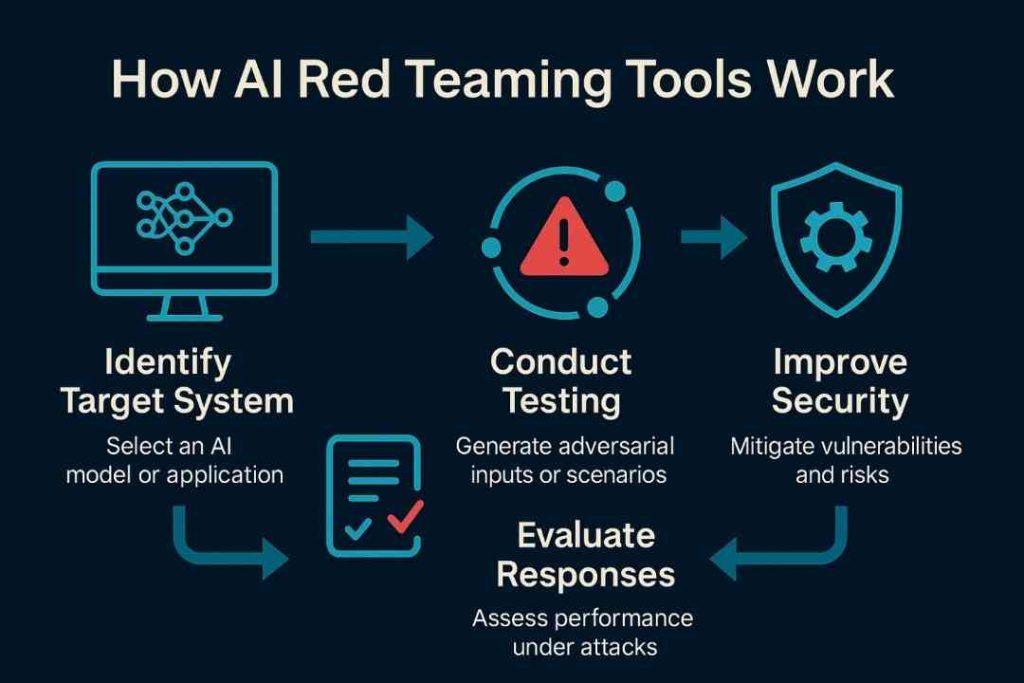

How AI Red Teaming Tools Work

AI red teaming tools use controlled, adversarial testing to reveal weaknesses in machine learning systems. They measure how well AI models detect and respond to abnormal or malicious inputs under different risk scenarios.

Simulating Adversarial Attacks

These tools simulate hostile behavior to test how AI models handle unexpected or manipulated inputs. Adversarial attacks often involve small, precise changes to data, such as altering text prompts, images, or code, to fool a model into producing incorrect or unsafe outputs.

Red teaming platforms use methods such as model inversion, prompt injection, and data poisoning to simulate real-world threats. Each test helps measure an AI model’s ability to recover or resist corruption.

Teams may run multiple iterations with different difficulty levels. For example:

- Prompt-based attacks: Modify user requests to bypass safety filters.

- Model fuzzing: Generate noisy or random data inputs to test output consistency.

- Environment simulation: Recreate external conditions that stress models during processing.

Identifying System Vulnerabilities

AI red-team tools analyze model behavior to identify security gaps and performance issues. They focus on points where the system can be tricked, biased, or coerced into outputting sensitive data.

Using evaluation metrics like precision loss, confidence drift, and response similarity, teams can pinpoint failure patterns. Reports often present results in tables showing which models or pipelines fail specific tests. For example:

| Vulnerability Type | Detection Example | Impact Level |

| Prompt Injection | Model accepts harmful or hidden prompts | High |

| Data Bias | Outputs favor one group over another | Medium |

| Output Leakage | Sensitive data revealed | Critical |

By logging each anomaly, red teaming helps developers decide what risks need patching before deployment.

Automating Threat Scenarios

Modern red teaming platforms rely on automation to manage large-scale testing. They use scripts and APIs to simulate thousands of adversarial actions without manual intervention.

Automation enables continuous stress testing, ensuring that new model versions are checked for both old and new threats. This approach supports compliance frameworks like OWASP and NIST that encourage routine testing cycles.

Some systems combine automation with human oversight. Human analysts review flagged issues that automated tools miss, improving coverage and accuracy. Together, automation and expert review create a steady process for identifying and reducing AI security risks.

Key Factors to Consider When Evaluating AI Red Teaming Tools

Selecting an AI red teaming tool requires attention to how well it fits into existing security setups, how flexibly it operates across technology environments, and how easily it can scale or adapt to new testing needs. The right combination of these traits helps organizations test AI systems effectively and respond faster to emerging risks.

Integration With Existing Security Workflows

Strong integration ensures that red teaming insights do not remain isolated from routine security operations. A tool should connect easily with standard systems such as SIEM platforms, Vulnerability Management Systems, and DevSecOps pipelines. This connection helps teams coordinate attack simulations, share alerts, and track mitigation steps without manual effort.

Key points to evaluate include:

- API compatibility with existing cybersecurity tools

- Automation hooks for scheduling and triggering tests

- Role‑based access controls to align with enterprise policies

Organizations also gain value when a tool supports clear reporting formats that feed directly into compliance dashboards or incident tracking systems. Integration that reduces friction shortens remediation cycles and makes red teaming results actionable.

Support for Multi-Cloud and Hybrid Environments

Modern AI deployments often rely on complex architectures that mix on‑premises infrastructure, private clouds, and public cloud providers. Tools built with broad environment support can simulate attacks consistently across these varied platforms.

The evaluation should focus on features such as:

| Capability | Description |

| Cross-cloud testing | Ability to assess AI models running in AWS, Azure, GCP, or regional providers |

| Secure data handling | Ensures red team data remains isolated and compliant across environments |

| Centralized management | One dashboard to monitor activities across clusters and environments |

A tool that runs independent of vendor‑specific APIs avoids locking defenses into a single ecosystem. Flexibility here prevents blind spots in AI security testing and ensures results remain reliable as infrastructure evolves.

Customization and Scalability

AI red teaming needs vary by organization size, data sensitivity, and regulatory requirements. Tools offering adjustable parameters for attack types, language models, and threat contexts help testers replicate realistic adversarial behavior.

Scalability matters when tests grow from isolated model evaluations to organization‑wide assessments. A capable tool allows teams to:

- Add new testing modules through plug‑ins

- Adjust compute resources for larger AI pipelines

- Support multiple testers or business units concurrently

Licensing and cost structures should scale flexibly as well. This approach helps organizations expand testing coverage incrementally rather than adopting an entirely new system when risk demands increase. Tools that balance customization with manageable growth better support long‑term AI security maturity.